The recent breakthrough by DeepSeek, a Chinese artificial intelligence (AI) firm, has sparked debate in both the technology and energy sectors. DeepSeek has released an open-source AI model that it claims is cheaper to build and more energy-efficient than those developed by leading U.S. companies like OpenAI and Anthropic. The announcement moved markets, hitting technology and energy stocks in particular. The central question now is whether this innovation will ultimately decrease or increase energy demand within the AI sector.

Market Reaction and Efficiency Claims

Initial Market Response

DeepSeek’s announcement of its new AI model triggered an immediate and substantial market reaction. Technology stocks, especially those of companies like Nvidia, and energy stocks declined as investors bet on a potential reduction in energy demand across the AI sector. Investors appeared to be reacting chiefly to the model’s cost: DeepSeek reported a training cost of around $5.6 million, a stark contrast to the hundreds of millions typically spent by its competitors. This disparity suggests a significant shift in the AI landscape, in which advanced AI capabilities may no longer be reserved for the best-funded firms.

Efficiency and Cost-Effectiveness

DeepSeek’s claims of efficiency and cost-effectiveness have fueled much of the discussion surrounding its new AI model. The ability to produce an advanced AI system at a fraction of the cost traditionally associated with such endeavors has raised hopes for democratizing access to cutting-edge AI technologies. This could potentially enable smaller companies and industries to leverage sophisticated AI tools that were previously out of reach. However, the true measure of the model’s energy efficiency remains a point of contention. While the initial development costs are lower, it is essential to consider the operational energy requirements of AI models, especially as they scale up for broader use.

Contrasting Perspectives on Energy Demand

Climate Advocates’ Optimism

From the viewpoint of climate advocates, DeepSeek’s breakthrough presents a promising step toward achieving reduced energy consumption within the AI sector. The potential for creating more energy-efficient AI models aligns well with broader goals aimed at minimizing carbon footprints and promoting sustainable technological practices. Advocates envision a future where AI advancements not only drive innovation but do so without exacerbating existing environmental issues. The expectation is that more efficient models would directly translate to lower energy consumption, thus contributing to global efforts in combating climate change and transitioning to cleaner, greener energy sources.

Counter-Arguments from Analysts

However, not everyone shares this optimistic outlook. Many analysts, venture capitalists, and AI enthusiasts argue that the introduction of cheaper AI could significantly drive up demand across various industries and sectors. This escalating demand might counterbalance any initial gains made in energy efficiency. They highlight the economic theory known as Jevons Paradox, which suggests that improvements in efficiency often lead to increased overall consumption. In the context of AI, making AI models more cost-effective and efficient might spur broader adoption, thereby amplifying the total energy demand. This perspective brings to light the potential complexities and unintended consequences of scaling AI technologies, particularly in terms of resource consumption and energy footprint.

Jevons Paradox and AI Energy Consumption

Understanding Jevons Paradox

Jevons Paradox, an economic concept dating back to the Victorian era, holds that efficiency gains which lower the cost of using a resource can expand its overall usage. The economist William Stanley Jevons described it in 1865, observing that more efficient steam engines increased Britain’s total coal consumption rather than reducing it. The idea is directly relevant to modern technologies such as AI. It suggests that the cost-efficiency of DeepSeek’s model could result in wider adoption of AI, so that total energy consumption rises even as individual models become more efficient. This counterintuitive outcome shows how hard it is to predict the long-term energy impact of supposedly more efficient technologies.
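The mechanism can be made concrete with a small calculation. The sketch below is illustrative only: it assumes that demand for AI queries follows a constant-elasticity curve and that the cost of a query is proportional to the energy it uses. Neither assumption comes from DeepSeek or from market data, and the function name `total_energy_ratio` and its parameters are hypothetical.

```python
def total_energy_ratio(efficiency_gain: float, demand_elasticity: float) -> float:
    """Return new total energy use divided by old total energy use.

    Assumptions (illustrative, not from the article's sources):
      * an efficiency gain of k divides energy per query, and therefore
        cost per query, by k;
      * the number of queries scales as k ** demand_elasticity.
    """
    if efficiency_gain <= 0:
        raise ValueError("efficiency_gain must be positive")
    # Queries grow by k**elasticity while energy per query shrinks by 1/k,
    # so total energy scales by k**(elasticity - 1).
    return efficiency_gain ** (demand_elasticity - 1.0)


if __name__ == "__main__":
    # Hypothetical tenfold efficiency gain under three demand responses.
    for elasticity in (0.5, 1.0, 1.5):
        ratio = total_energy_ratio(10.0, elasticity)
        print(f"elasticity {elasticity}: total energy x{ratio:.2f}")
```

Under these assumptions, total energy use falls when the elasticity of demand is below 1, stays flat at exactly 1, and rises above 1. The last case is the one Jevons described.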

Implications for AI Sector

Applying Jevons Paradox to the AI sector raises crucial questions about the long-term energy implications of DeepSeek’s breakthrough. A lower development cost does not necessarily mean lower operational energy requirements. Once deployed, a model performs inference each time it answers a query or makes a prediction, and every inference consumes energy. As AI models advance in complexity and capability, the energy required per inference is expected to increase. This casts uncertainty over the net energy impact of DeepSeek’s new model, particularly as its adoption becomes more widespread.

Energy Consumption During Inference

Training vs. Inference Energy Requirements

Even though DeepSeek’s model was developed with considerable cost efficiency, translating those savings into reduced operational energy requirements remains a challenge. The distinction between the energy required to train an AI model and the energy needed to run inference is critical. Training is the initial development phase, in which a model is fed vast amounts of data to learn predictive capabilities. Its energy cost can be massive, but it is largely paid once. Inference recurs with every request for as long as the model is in service, and it becomes increasingly energy-intensive as models grow more advanced and are employed in diverse, real-time applications.
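A back-of-the-envelope sketch shows why the distinction matters. Every figure below is a placeholder chosen for round arithmetic, not a measurement of DeepSeek’s or any other model, and the function names are hypothetical.

```python
def lifetime_energy_kwh(training_kwh: float, kwh_per_query: float,
                        queries_per_day: float, days: float) -> float:
    """One-time training energy plus inference energy accumulated over `days`."""
    return training_kwh + kwh_per_query * queries_per_day * days


def breakeven_days(training_kwh: float, kwh_per_query: float,
                   queries_per_day: float) -> float:
    """Days of service after which cumulative inference energy equals training energy."""
    daily_inference_kwh = kwh_per_query * queries_per_day
    if daily_inference_kwh <= 0:
        raise ValueError("daily inference energy must be positive")
    return training_kwh / daily_inference_kwh


if __name__ == "__main__":
    # Placeholder inputs (assumptions, not reported data).
    training = 1_000_000.0      # kWh spent once on training
    per_query = 0.001           # kWh per inference request
    daily_queries = 10_000_000  # requests served per day

    days = breakeven_days(training, per_query, daily_queries)
    first_year = lifetime_energy_kwh(training, per_query, daily_queries, 365)
    print(f"Inference matches training energy after about {days:.0f} days")
    print(f"First-year total: about {first_year:,.0f} kWh")
```

With these placeholder inputs, inference overtakes training within the first year. In that case a cheaper training run changes the lifetime total far less than a change in usage would.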

Future Energy Impacts

The long-term energy impact of AI models will ultimately hinge on how heavily they are used and how much energy each inference requires. As AI becomes further ingrained in various industries, dependence on inference is likely to increase, potentially driving up overall energy consumption. This complicates any assessment of the true energy impact of DeepSeek’s breakthrough. Despite the potential for cost and energy savings during the initial training phase, the operational energy footprint could surge as AI applications expand across sectors.

Domestic Response and Innovation

Competitive Pressure on Domestic AI Companies

DeepSeek’s innovation is poised to trigger a rapid competitive response from U.S. AI companies. Faced with a highly efficient and cost-effective rival, these companies will likely be pushed to develop efficient models of their own. That pressure could drive significant advances in AI technology, yielding models that are not only more capable but possibly more energy-efficient as well.

Potential Outcomes of Innovation

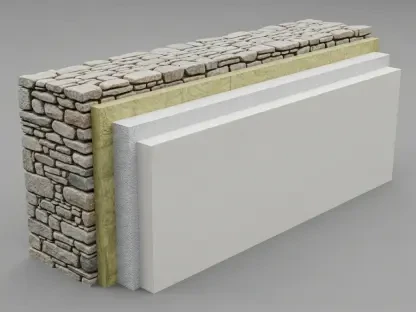

The trajectory domestic companies take in response to DeepSeek’s breakthrough will play a pivotal role in shaping the future energy demands of the AI sector. If the efforts focus on developing energy-efficient technologies, there could be a tangible reduction in overall energy consumption. Conversely, if the industry’s response leans toward aggressive scaling and increased investments in energy-hungry infrastructure, the net energy consumption could rise. The dual paths outline a scenario where the direction and speed of innovation will significantly influence the environmental impact of advancing AI technologies, underscoring the need for a balanced and strategic approach to future developments.

Possible Futures of AI Energy Demand

Scenario 1: AI and Renewable Energy Scalability

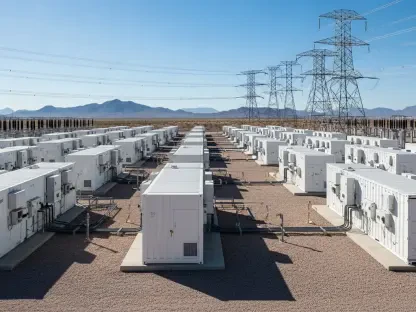

An ideal scenario envisions a future in which growth in AI demand keeps pace with the build-out of nuclear and renewable energy sources. The acceleration of AI technologies would be matched by an equally robust expansion of clean energy infrastructure. This alignment could mitigate the environmental impact of increased AI usage and support a sustainable, technologically advanced future. The benefits would include not only a smaller carbon footprint but also more reliable and resilient energy systems.

Scenario 2: Increased Reliance on Fossil Fuels

In a less optimistic scenario, an exponential increase in AI demand could lead to greater reliance on fossil fuels, necessitating the development of significant new energy infrastructures. This growth trajectory presents long-term environmental challenges, as the carbon footprint of AI technologies could increase substantially. The reliance on traditional energy sources might overshadow the efficiency gains achieved by new AI models, leading to an overall increase in resource consumption and environmental degradation. This path underscores the risks associated with unchecked technological growth, particularly when it is not accompanied by parallel advancements in sustainable energy solutions.

Scenario 3: Techno-Optimist Future

Considering DeepSeek’s claim of enhanced energy efficiency, there is optimism that such advancements could lessen the AI sector’s carbon footprint. On the other hand, the adoption of more efficient AI models could drive wider use, potentially escalating overall energy consumption. As these new models become more accessible, they might attract a broader range of users, increasing the load on data centers and other infrastructure.

Financial markets have so far reflected this uncertainty. Technology stocks such as Nvidia and energy stocks fell on DeepSeek’s announcement, as investors weighed the prospect of lower energy demand against the possibility that cheaper models will drive wider AI adoption.

Ultimately, the real-world implications of DeepSeek’s AI model on energy consumption remain to be seen. As the technology matures and integrates into existing systems, the AI industry will keep a close eye on these developments to gauge their long-term effects.