The artificial intelligence (AI) revolution has captivated the world with its digital advancements: lightning-fast chips, intricate algorithms, and seemingly endless streams of data driving innovation across industries. Yet beneath this high-tech surface lies a less celebrated but equally vital component: the physical infrastructure that makes AI possible. Power grids, data centers, cooling systems, and transmission networks are straining under the weight of AI’s relentless growth, revealing a challenge that’s as much about concrete and cables as it is about code. The energy demands are staggering, and existing systems are often ill-equipped for the task. This article examines the critical role of smarter infrastructure in sustaining AI’s trajectory, and how physical systems are becoming both the backbone of technological progress and a new frontier for economic opportunity.

The Energy Crisis Fueling AI Expansion

The meteoric rise of AI technologies is creating an insatiable demand for electricity, placing immense pressure on global energy resources. Data centers, the beating heart of AI operations, already consume a significant and growing share of the world’s power, with projections indicating that this usage could triple within the next ten years. To put this into perspective, meeting such demand might require the equivalent of hundreds of new large-scale power stations between now and 2035. This isn’t merely a matter of plugging in more servers; it’s a complex issue that intersects with energy security, geopolitical stability, and urban development. Governments and corporations alike are grappling with how to scale power generation without destabilizing grids or exacerbating environmental concerns, making this a pivotal challenge for the digital age that demands both immediate action and long-term strategic planning.
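To make the tripling projection more concrete, the short sketch below works out the steady year-over-year growth rate it would imply. It assumes smooth compound growth, which the projection itself does not claim, and uses a normalized baseline of 1.0 rather than any real consumption figure; the function names are illustrative.

```python
def implied_annual_growth(multiple: float, years: int) -> float:
    """Constant yearly growth rate that turns 1x into `multiple`x after `years` years."""
    return multiple ** (1 / years) - 1


def project(start: float, rate: float, years: int) -> list[float]:
    """Compound `start` at `rate` per year; returns values for year 0..years."""
    return [start * (1 + rate) ** year for year in range(years + 1)]


# Assumption: usage triples over ten years at a constant compound rate.
rate = implied_annual_growth(3.0, 10)

# Normalized baseline (1.0 = today's usage), not a measured figure.
trajectory = project(1.0, rate, 10)

print(f"Implied annual growth: {rate:.1%}")  # about 11.6% per year
```

Under that assumption, tripling in a decade corresponds to demand growing by roughly 11.6% every year, which helps explain why incremental grid upgrades struggle to keep pace.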

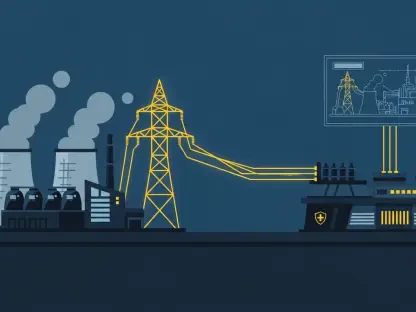

Beyond the sheer volume of energy required, the unpredictability of AI’s growth adds another layer of complexity to the energy crisis. Unlike traditional industries with more predictable consumption patterns, AI workloads can spike dramatically with new model training or widespread adoption of applications. This volatility strains existing power infrastructure, often leading to inefficiencies or outages in regions unprepared for such surges. Additionally, the concentration of data centers in specific geographic areas creates localized energy hotspots, further stressing regional grids. Addressing this requires not just more power, but a rethinking of how energy is distributed and managed. Innovative approaches, such as microgrids or demand-response systems, are being explored to balance loads more effectively, ensuring that AI’s energy appetite doesn’t outpace the ability to supply it sustainably across diverse global markets.
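The load-balancing idea behind demand response can be illustrated with a toy example. The sketch below is not how any particular grid operator or data center does it; it assumes a simple hourly model in which some load is firm (must run now) and some is flexible (for instance, deferrable training jobs), and it greedily pushes flexible load into later hours whenever a hypothetical capacity limit would be exceeded. All numbers are made up for illustration.

```python
def shift_flexible_load(firm, flexible, capacity):
    """Greedy demand response: defer flexible load that would exceed capacity.

    firm, flexible: per-hour demand in the same (arbitrary) power units.
    capacity: hypothetical limit on total load per hour.
    Returns (scheduled total load per hour, flexible load still deferred at the end).
    Firm load is never curtailed, so an hour can still exceed capacity
    if firm demand alone is above the limit.
    """
    scheduled = []
    backlog = 0.0
    for firm_now, flex_now in zip(firm, flexible):
        headroom = max(capacity - firm_now, 0.0)  # room left after firm load
        wanted = flex_now + backlog               # new plus previously deferred work
        run = min(wanted, headroom)               # run only what fits
        backlog = wanted - run                    # defer the remainder
        scheduled.append(firm_now + run)
    return scheduled, backlog


# Illustrative four-hour window (made-up values).
firm = [60, 70, 80, 50]
flexible = [30, 40, 30, 10]
capacity = 100

unmanaged = [f + x for f, x in zip(firm, flexible)]
managed, leftover = shift_flexible_load(firm, flexible, capacity)

print("Unmanaged peak:", max(unmanaged))  # 110
print("Managed peak:", max(managed))      # 100.0
```

In this toy case the same total work is completed, but the peak drops from 110 to 100 units because flexible jobs wait for hours with spare headroom. Real demand-response programs involve pricing signals, forecasts, and contractual limits that this sketch leaves out.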

Overcoming Infrastructure Limitations with Innovation

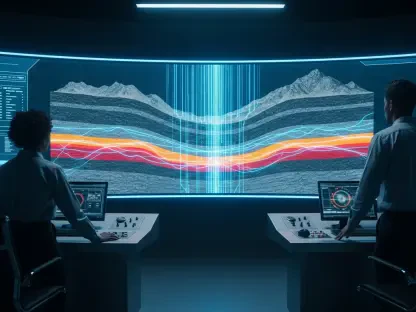

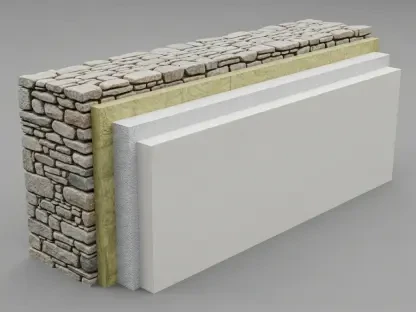

Current infrastructure, much of it designed for a bygone era of computing, is proving inadequate for the unique demands of modern AI systems. Power grids, originally built for steady, predictable loads, falter under the intense, fluctuating needs of data centers, while traditional cooling methods struggle to manage the heat generated by high-performance servers. Land availability for new facilities is also becoming a constraint, especially in densely populated or strategically critical areas. These bottlenecks threaten to slow AI’s progress unless addressed with urgency. The good news is that the industry is responding not by simply scaling up outdated systems, but by reimagining them. Smarter designs and technologies are being deployed to bridge the gap between what exists and what AI requires, turning limitations into a catalyst for groundbreaking advancements in infrastructure.

A key focus of this transformation is efficiency through cutting-edge innovation. Liquid cooling systems, for instance, are gaining traction as a more energy-efficient alternative to traditional air cooling, significantly reducing the power needed to keep servers at optimal temperatures. Meanwhile, some forward-thinking companies are repurposing waste heat from data centers for practical uses, such as heating nearby communities, thereby cutting energy waste. Digital tools, like virtual simulations of data center operations, are also playing a crucial role by allowing operators to predict and optimize energy usage in real time. These advancements highlight a shift in mindset: rather than just building more, the emphasis is on building better. By prioritizing efficiency and adaptability, the industry is finding ways to support AI’s growth without being constrained by the physical limitations of yesterday’s infrastructure.

Redefining Value in the Digital Age

As AI reshapes global markets, attention is increasingly turning from the headline-grabbing tech giants to the less visible but equally essential companies that power their operations. Firms specializing in critical infrastructure components—such as high-capacity cables, advanced power conversion systems, innovative cooling technologies, and robust grid connectivity solutions—are stepping into the spotlight. Their role is becoming indispensable as AI systems demand ever more reliable and efficient physical support. Evidence of this shift can be seen in the expanding order books and procurement pipelines of these businesses, which signal strong market confidence in their importance. This trend underscores a broader realization: the digital economy’s next chapter will hinge as much on who can sustain the underlying systems as on who develops the next groundbreaking algorithm or application.

This redefinition of value extends to investment landscapes, where infrastructure providers are emerging as a compelling opportunity. Unlike the volatile, hype-driven tech sector, these companies often offer more stable, long-term growth potential, driven by the tangible needs of AI expansion. Their contributions ensure that data centers remain operational, grids stay resilient, and hyperscalers can scale without disruption. Moreover, their work often spans multiple industries, from energy to urban planning, amplifying their economic impact. As tech giants pour trillions into capital expenditures to build and maintain AI capabilities, the ripple effects benefit these behind-the-scenes players. This dynamic reveals a new economic frontier where the mundane—power, cooling, connectivity—becomes a cornerstone of innovation, reshaping perceptions of what drives value in a technology-driven world.

Balancing Growth with Environmental Responsibility

Sustainability has become a non-negotiable factor in the development of AI infrastructure, driven by both environmental imperatives and regulatory frameworks. Major technology companies are committing vast resources to renewable energy sources, with significant capacity already secured to power their operations. In regions like Southeast Asia, where hyperscale projects are on the rise, this push is also accelerating grid modernization and clean energy integration. Such efforts reflect a broader industry trend to align AI’s rapid expansion with global climate goals. The challenge lies in scaling infrastructure to meet soaring demand while minimizing carbon footprints, a balancing act that requires both technological innovation and strategic policy alignment to ensure that growth doesn’t come at the expense of the planet.

Regulatory pressures are further shaping this landscape, as governments worldwide impose stricter guidelines on energy consumption and emissions. These mandates are prompting companies to rethink how they design and operate data centers, prioritizing energy-efficient technologies and sustainable practices. Beyond compliance, there’s a growing recognition that green infrastructure can be a competitive advantage, attracting investment and public goodwill. Projects that integrate solar, wind, or other renewables into power supplies for AI operations are setting new standards for the industry. This convergence of sustainability and technology highlights a critical insight: smarter infrastructure isn’t just about meeting immediate power needs but about crafting systems that support long-term ecological and economic stability, ensuring AI’s benefits are realized responsibly.

Building a Sustainable Path Forward

Taken together, AI’s infrastructure challenges are being met through adaptation and foresight. The industry is tackling soaring energy demands by embracing efficiency, overcoming outdated systems through innovation, and redefining economic value by spotlighting the unsung heroes of power and connectivity. Sustainability has emerged as a guiding principle, shaping how infrastructure evolves to support AI’s ambitions. Looking ahead, the focus must shift to actionable strategies: scaling renewable energy integration, investing in next-generation cooling and grid technologies, and fostering collaboration between tech leaders and policymakers. These steps will help ensure that the physical foundations of AI remain robust and responsible, paving the way for a digital economy that thrives on innovation and sustainability in equal measure.