As generative AI draws global attention for its potential benefits, from boosting worker productivity to accelerating scientific research, the rapid deployment of powerful models across many industries has raised significant environmental concerns. This article examines why generative AI is so resource-intensive and explores expert-driven initiatives to mitigate its environmental impact.

The excitement around generative AI is palpable, but its rapid growth poses a sustainability challenge. Generative AI models such as OpenAI’s GPT-4, which often have billions of parameters, require massive computational power to train. That demand translates into heavy electricity use, increased carbon dioxide emissions, and added pressure on electrical grids. Even after a model is developed, deploying it in real-world applications and continually fine-tuning it require substantial, ongoing energy.

The Resource-Intensive Nature of Generative AI

Massive Computational Power Requirements

Training is the first major source of this demand. Fitting a model with billions of parameters requires powerful hardware running for long stretches, and every kilowatt-hour consumed adds to carbon dioxide emissions and to the load on electrical grids. Deployment and continuous fine-tuning then add an ongoing energy cost on top of the one-time cost of training.

Most of the energy spent on training goes to graphics processing units (GPUs) and other specialized hardware built for intensive computation. These devices often run for weeks or even months to train a single model, consuming vast amounts of electricity. As developers compete to build ever more capable models, energy requirements keep escalating and the environmental costs compound. Addressing this challenge requires advances in energy-efficient algorithms and hardware.
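A back-of-the-envelope estimate makes the scale concrete. The sketch below multiplies GPU count, average power draw, and runtime to get energy, scales the result by a power usage effectiveness (PUE) factor to account for cooling and other facility overhead, and converts it to CO2 using a grid carbon-intensity factor. Every input value is a hypothetical placeholder, not a figure from the article or from any real training run.

```python
def training_energy_kwh(num_gpus: int, watts_per_gpu: float, hours: float,
                        pue: float = 1.0) -> float:
    """Estimate facility-level training energy in kilowatt-hours.

    IT energy = GPUs x average power draw x runtime. PUE (power usage
    effectiveness) scales it up to include cooling and other overhead.
    """
    it_energy_kwh = num_gpus * watts_per_gpu * hours / 1000.0  # Wh -> kWh
    return it_energy_kwh * pue


def emissions_kg_co2(energy_kwh: float, grid_kg_per_kwh: float) -> float:
    """Convert energy to CO2 emissions using a grid carbon-intensity factor."""
    return energy_kwh * grid_kg_per_kwh


# Hypothetical run (assumed values, not from the article): 1,000 GPUs at
# 400 W average for four weeks, PUE of 1.2, grid at 0.4 kg CO2 per kWh.
hours = 4 * 7 * 24
energy = training_energy_kwh(1_000, 400, hours, pue=1.2)
print(f"{energy:,.0f} kWh, {emissions_kg_co2(energy, 0.4) / 1000:,.1f} t CO2")
```

Because the estimate is linear in every input, doubling either the GPU count or the runtime doubles both the energy and the emissions.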

Water Usage for Cooling

In addition to electricity, the water needed to cool the hardware used to train, deploy, and fine-tune generative AI models can strain municipal water supplies and disrupt local ecosystems. Chilled water cools data centers by absorbing heat from computing equipment; by one estimate, a data center needs about two liters of water for every kilowatt-hour of energy it consumes. This water use, combined with the physical footprint of data centers, has direct and indirect implications for biodiversity.
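Applying the two-liters-per-kilowatt-hour estimate is a single multiplication. The sketch below assumes a hypothetical facility drawing a constant 1 megawatt; actual water use varies with climate and cooling design, so the factor is exposed as a parameter rather than fixed.

```python
# Rule-of-thumb estimate cited in the text: ~2 liters of cooling water per kWh.
LITERS_PER_KWH = 2.0


def cooling_water_liters(energy_kwh: float,
                         liters_per_kwh: float = LITERS_PER_KWH) -> float:
    """Estimate cooling water needed for a given amount of energy use."""
    return energy_kwh * liters_per_kwh


# Hypothetical 1 MW (1,000 kW) facility running continuously for one day.
daily_kwh = 1_000 * 24  # 24,000 kWh
print(f"{cooling_water_liters(daily_kwh):,.0f} liters per day")  # prints: 48,000 liters per day
```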

Data centers can have a significant impact on local water resources, particularly in regions already experiencing water scarcity. The growing number of data centers, combined with rising demand for generative AI applications, means that water use keeps climbing. This water is often drawn from municipal supplies, where it competes with local communities and agriculture and can lead to conflicts over resource allocation. Managing cooling water sustainably is therefore critical, and it calls for innovative cooling solutions and water-conservation practices across the industry.

Environmental Impact of Data Centers

Electricity Consumption and Carbon Footprint

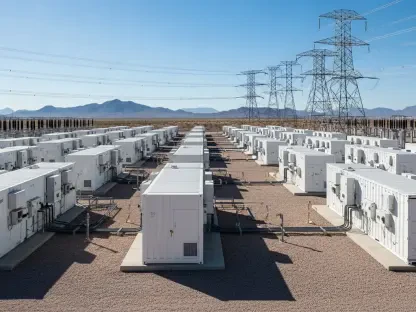

Data centers play a pivotal role in the environmental footprint of generative AI because of their substantial electricity consumption. These temperature-controlled facilities house computing infrastructure such as servers, data storage drives, and network equipment. Amazon, for instance, operates more than 100 data centers worldwide, each housing about 50,000 servers that support its cloud computing services. Running this equipment, and the cooling that keeps it within safe operating temperatures, requires a continuous supply of electricity.

The rise of generative AI has spurred a surge in data center construction, and its workloads demand far greater power density than typical computing: a generative AI training cluster might consume seven to eight times more energy than a typical computing workload. Because many data centers still rely on fossil fuel-based power, this growth enlarges the carbon footprint, which makes powering data centers with renewable energy a critical part of addressing these concerns.

Growth in Data Center Power Requirements

Recent estimates suggest that the power requirements of North American data centers nearly doubled, from 2,688 megawatts at the end of 2022 to 5,341 megawatts at the end of 2023, driven in part by generative AI. Worldwide, data centers consumed about 460 terawatt-hours of electricity in 2022; if they were a country, they would rank as the 11th largest electricity consumer in the world, between Saudi Arabia and France. These figures reflect, in part, the growing use of generative AI applications, which require substantial computing resources.
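A quick percentage-change calculation confirms that the North American figures amount to nearly a doubling in a single year. The helper name `percent_change` is illustrative.

```python
def percent_change(old: float, new: float) -> float:
    """Percentage change from `old` to `new`."""
    return (new - old) / old * 100.0


# North American data center power requirements, in megawatts (from the text).
na_2022_mw = 2_688
na_2023_mw = 5_341
print(f"{percent_change(na_2022_mw, na_2023_mw):.1f}% increase "
      f"({na_2023_mw / na_2022_mw:.2f}x)")  # prints: 98.7% increase (1.99x)
```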

Projections indicate that by 2026 this figure could approach 1,050 terawatt-hours, which would move data centers up to fifth place on the global list, between Japan and Russia. Because new data centers are being built so quickly, much of their electricity must come from fossil fuel-based power plants, compounding sustainability concerns. Efforts to reduce this impact include transitioning to renewable energy sources and improving data center efficiency. However, as demand for generative AI grows, balancing environmental sustainability with technological advancement becomes increasingly complex.
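Annual electricity figures such as 460 and 1,050 are energy totals, measured in terawatt-hours. Dividing them by the 8,760 hours in a non-leap year converts them to average continuous power, and a compound-growth calculation shows what the 2026 projection implies per year. The sketch treats 2022 to 2026 as four annual steps, a simplifying assumption; with these inputs it gives roughly 52.5 GW of average draw in 2022, about 120 GW in 2026, and growth of roughly 23 percent per year.

```python
HOURS_PER_YEAR = 8_760  # non-leap year


def average_power_gw(annual_twh: float) -> float:
    """Average continuous power implied by an annual energy total."""
    return annual_twh * 1_000 / HOURS_PER_YEAR  # TWh -> GWh, then per hour


def cagr(start: float, end: float, years: int) -> float:
    """Compound annual growth rate between two values."""
    return (end / start) ** (1 / years) - 1


# Global data center electricity use, in TWh (from the text).
twh_2022, twh_2026 = 460, 1_050
print(f"2022: {average_power_gw(twh_2022):.1f} GW average")
print(f"2026: {average_power_gw(twh_2026):.1f} GW average")
# Assumption: 2022 -> 2026 counted as four compounding years.
print(f"Implied growth: {cagr(twh_2022, twh_2026, 2026 - 2022):.1%} per year")
```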

Continuous Use and Inference Energy Demands

Persistent Energy Requirements

The energy demands of generative AI models persist beyond training. Every use of a model, such as a query to ChatGPT, consumes energy, and researchers estimate that a ChatGPT query uses about five times more electricity than a simple web search. Yet because generative AI interfaces are easy to use and information about their environmental impact is scarce, users have little incentive to cut back.
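The five-times estimate turns into a simple comparison: answering a request with a chatbot instead of a search engine costs about four extra searches' worth of energy. The article gives only the ratio, so the per-search energy below is a hypothetical placeholder, and the function name is illustrative.

```python
# Ratio from the text: one ChatGPT query ~ 5x the electricity of a web search.
QUERY_TO_SEARCH_RATIO = 5.0


def extra_energy_kwh(num_requests: int, search_wh: float,
                     ratio: float = QUERY_TO_SEARCH_RATIO) -> float:
    """Additional energy (kWh) from serving requests via chatbot instead of search."""
    return num_requests * search_wh * (ratio - 1) / 1000.0  # Wh -> kWh


# Hypothetical: one million requests, assuming 0.3 Wh per web search.
print(f"{extra_energy_kwh(1_000_000, 0.3):,.0f} kWh extra")  # prints: 1,200 kWh extra
```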

In traditional AI, energy use is split fairly evenly among data processing, model training, and inference (using a trained model to make predictions). In generative AI, however, inference is expected to dominate electricity demand because these models are becoming ubiquitous across a growing range of applications. As a result, generative AI’s energy footprint extends well beyond initial development and requires sustained energy input for day-to-day operation.
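A minimal lifecycle model shows why inference can come to dominate: training is a one-time cost, while inference energy grows with every query served. The sketch computes how many days of serving it takes for cumulative inference energy to match the training energy, and what share of total energy inference accounts for after a year. All three inputs are hypothetical placeholders, and the model ignores data processing and hardware manufacturing.

```python
def breakeven_days(training_kwh: float, kwh_per_query: float,
                   queries_per_day: float) -> float:
    """Days of serving after which cumulative inference energy equals training energy."""
    return training_kwh / (kwh_per_query * queries_per_day)


def inference_share(training_kwh: float, kwh_per_query: float,
                    queries_per_day: float, days: float) -> float:
    """Fraction of total (training + inference) energy spent on inference after `days`."""
    inference_kwh = kwh_per_query * queries_per_day * days
    return inference_kwh / (training_kwh + inference_kwh)


# Hypothetical model: 1 GWh to train, 2 Wh per query, 5 million queries per day.
TRAIN_KWH, PER_QUERY_KWH, QPD = 1_000_000, 0.002, 5_000_000
print(f"Inference matches training after "
      f"{breakeven_days(TRAIN_KWH, PER_QUERY_KWH, QPD):.0f} days")
print(f"Inference share after one year: "
      f"{inference_share(TRAIN_KWH, PER_QUERY_KWH, QPD, 365):.1%}")
```

With these assumed inputs, inference overtakes training after 100 days and accounts for about 78.5 percent of the model’s energy after one year; the more widely a model is used, the sooner the crossover arrives.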

Increasing Complexity and Energy Consumption

As models become larger and more complex, the energy needed for inference will rise accordingly. Generative AI models also tend to have short lifespans, driven by rising demand for new AI applications: companies release new models frequently, and each new model often has more parameters and therefore requires more energy to train. This continual cycle of developing and deploying ever more complex models exacerbates the environmental impact and highlights the need for sustainable practices in the generative AI industry.
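Short lifespans matter because a model’s training energy is spread over fewer queries before it is retired. The sketch below amortizes a hypothetical training cost over a model’s service life; the function name and all inputs are illustrative assumptions.

```python
def amortized_training_wh_per_query(training_kwh: float, queries_per_day: float,
                                    lifespan_days: float) -> float:
    """Training energy attributed to each query served before retirement, in Wh."""
    total_queries = queries_per_day * lifespan_days
    return training_kwh * 1_000 / total_queries  # kWh -> Wh


# Hypothetical: 1 GWh (1,000,000 kWh) to train, 5 million queries per day.
for lifespan in (400, 200, 100):
    wh = amortized_training_wh_per_query(1_000_000, 5_000_000, lifespan)
    print(f"{lifespan:>3} days in service -> {wh:.1f} Wh of training energy per query")
```

Halving the service life doubles the training energy attributable to each query (0.5, 1.0, and 2.0 Wh here), before accounting for the larger training budgets of successor models.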

The increasing size and intricacy of these models also necessitate more substantial computing resources, resulting in further strain on data centers and energy grids. This escalating complexity, while driving advancements in AI capabilities, poses significant challenges in terms of sustainability. Researchers and industry leaders must work together to find innovative solutions that balance the demand for advanced AI models with the urgent need to reduce their environmental impact.

Water Consumption and Hardware Implications

Cooling Data Centers

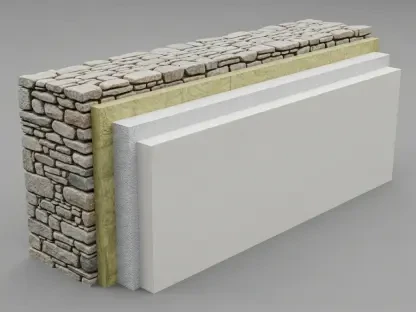

Deploying generative AI also demands considerable water for cooling data centers. At the estimated rate of about two liters of cooling water per kilowatt-hour, data center water demand rises in step with electricity use. Combined with the sheer physical scale of data centers, this has a profound impact on local water resources, particularly in regions already facing water scarcity.

The environmental impacts of water consumption in generative AI deployment are multifaceted. Beyond straining municipal water supplies, using large quantities of water for cooling can cause thermal pollution if it is not properly managed, disrupting aquatic ecosystems and harming both biodiversity and water quality. Advanced cooling technologies and water recycling systems are critical to mitigating these effects.

Hardware Manufacturing and Carbon Footprint

The environmental impacts extend to the computing hardware itself. GPUs, essential for handling intensive generative AI workloads, require a more complex and energy-intensive fabrication process than simpler CPUs. This complexity contributes to a substantial carbon footprint, compounded by emissions from sourcing materials and transporting products. The market for GPUs is expanding rapidly: market research firm TechInsights estimates that the three leading producers (NVIDIA, AMD, and Intel) shipped 3.85 million GPUs to data centers in 2023, up from about 2.67 million in 2022.
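The TechInsights estimates imply roughly 44 percent year-over-year growth in data-center GPU shipments, as the quick calculation below shows. The helper name is illustrative.

```python
def percent_change(old: float, new: float) -> float:
    """Percentage change from `old` to `new`."""
    return (new - old) / old * 100.0


# Data-center GPU shipments, in millions of units (TechInsights estimates cited in the text).
shipments_2022, shipments_2023 = 2.67, 3.85
growth = percent_change(shipments_2022, shipments_2023)
extra = shipments_2023 - shipments_2022
print(f"{growth:.1f}% growth, {extra:.2f} million additional GPUs")  # prints: 44.2% growth, 1.18 million additional GPUs
```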

This rapid growth suggests the industry is on an unsustainable path and needs to shift toward environmentally responsible development practices. Recycling and repurposing outdated hardware, along with investing in research on more energy-efficient components, are critical strategies for reducing the environmental impact of generative AI. A comprehensive approach that spans the entire supply chain, from raw material extraction to end-of-life management, is essential for making the AI hardware market sustainable.

Towards Sustainable Development and Mitigating Impacts

Addressing the environmental challenges of generative AI requires a comprehensive approach that weighs its environmental and societal costs against its perceived benefits. Only through such a nuanced understanding can we identify the trade-offs and foster responsible development practices. Elsa A. Olivetti and her MIT colleagues make this case in their 2024 paper “The Climate and Sustainability Implications of Generative AI,” which calls for systematic, contextual consideration of these implications. Advances in the field have outpaced our ability to measure and understand these trade-offs adequately.

Conclusion

Generative AI offers transformative potential but carries substantial environmental costs. The energy demands of training and deploying models, the water used for cooling, and the carbon footprint of high-performance computing hardware present significant sustainability challenges. As generative AI spreads across sectors, strategies to mitigate its environmental impact are imperative. Experts and researchers must take a balanced approach that weighs both the technology’s promise and its pitfalls. By understanding these trade-offs systematically and comprehensively, the industry can move toward more sustainable practices and ensure that the benefits of AI do not come at the expense of our planet’s health. As the conversation around generative AI evolves, so too must our strategies for building environmental sustainability into how this powerful technology is developed and deployed.