Every new rack of servers running state-of-the-art AI models adds invisible weight to the power system, while the very algorithms behind those models promise to ease that burden by squeezing more value from every kilowatt-hour that flows through wires, buildings, vehicles, factories, and data halls. That tension has become the defining storyline of the energy transition: AI is both an accelerating demand shock and a control technology suited to the physics, variability, and scale of a cleaner grid. The stakes are high because long-lived infrastructure, stringent reliability targets, and increasingly volatile weather leave little room for trial and error. Yet the payoff is equally clear: grid operators gain faster foresight, planners can stress-test futures before steel meets ground, and researchers compress discovery cycles for the materials that set the pace of decarbonization. Across programs, laboratories, and partnerships, research and convening efforts have begun to align, treating AI as neither hero nor villain but as an instrument that must be carefully orchestrated to deliver dependable, affordable, low-carbon power.

AI’s Rising Power Draw

Data centers running AI training and inference at scale are reshaping load profiles, clustering near cheap power and fiber while pushing local networks toward their thermal and capacity limits. In several markets, large AI facilities have already outpaced the growth forecasts used to plan substations and feeders, tightening reserve margins and exposing municipal utilities and co-ops to rate pressure. The challenge is not only megawatts but timing: load from compute clusters can coincide with the evening ramp, just as solar output fades and dispatchable capacity thins. Left unmanaged, that overlap raises both emissions and costs. Utilities and developers increasingly consider co-locating data centers with renewables and storage, but interconnection queues and siting constraints can delay deployment. The result is a sequencing puzzle (build power first or load first?) and a reminder that demand planning now requires a sharper lens than historical data alone can offer.

Meeting this surge without backsliding on climate goals hinges on a dual push: curbing the energy intensity of computation while modernizing the facilities and grid connections that power it. On the compute side, domain-specific accelerators, sparsity-aware training, mixed-precision arithmetic, and algorithmic pruning lower the joules spent per operation, while job schedulers route flexible workloads to regions with surplus clean power. On the facility side, direct-to-chip liquid cooling and hot-aisle containment reduce cooling overhead, heat-reuse schemes put waste heat to work, and power architectures that integrate onsite storage and grid services blunt peak impacts. Siting matters as much as technology choice; proximity to transmission backbones, hydropower, or otherwise-curtailed wind can reduce overall system stress. Emerging forums dedicated to data center power planning have begun to standardize playbooks across operators and utilities, turning case-by-case negotiations into templates that balance local reliability with national decarbonization targets.
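To make the scheduling idea concrete, here is a minimal sketch of spatial load shifting, assuming a scheduler that already has per-region forecasts of grid carbon intensity, network latency, and spare power headroom. The `Region` fields, region names, and all numbers are hypothetical; real schedulers weigh many more factors, such as data residency, pricing, and cooling limits.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Region:
    name: str
    carbon_g_per_kwh: float  # forecast grid carbon intensity (hypothetical)
    latency_ms: float        # network latency to the job's data or users
    spare_mw: float          # power headroom the site can absorb right now


def pick_region(regions: list[Region], job_mw: float,
                max_latency_ms: float) -> Optional[Region]:
    """Return the lowest-carbon region that meets latency and capacity limits."""
    feasible = [r for r in regions
                if r.latency_ms <= max_latency_ms and r.spare_mw >= job_mw]
    if not feasible:
        return None  # nothing qualifies; the caller could defer the job instead
    return min(feasible, key=lambda r: r.carbon_g_per_kwh)


regions = [
    Region("hydro-north", carbon_g_per_kwh=30.0, latency_ms=45.0, spare_mw=20.0),
    Region("wind-plains", carbon_g_per_kwh=120.0, latency_ms=25.0, spare_mw=5.0),
    Region("gas-metro", carbon_g_per_kwh=420.0, latency_ms=5.0, spare_mw=50.0),
]
choice = pick_region(regions, job_mw=10.0, max_latency_ms=50.0)
print(choice.name if choice else "defer")  # hydro-north
```

Tightening the latency budget to 10 ms in this toy example leaves only the gas-heavy site, which is exactly the trade-off between service quality and emissions that siting and scheduling decisions have to weigh.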

Running a Reliable, Renewable Grid

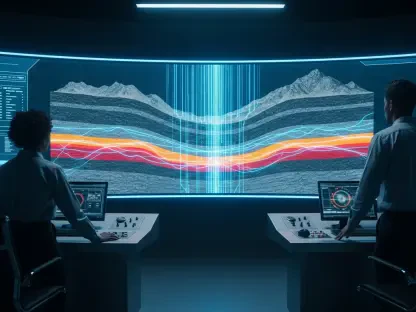

As the share of variable renewables grows, grid operation shifts from routine, predictable dispatch to continuous estimation and control. Forecast errors in wind and solar output compound with changing demand patterns from electrified heating and transport, favoring algorithms that can learn, adapt, and act across time scales. An information layer of high-resolution sensing tied to predictive and prescriptive analytics now sits atop the physical grid, guiding unit commitment, economic dispatch, and corrective actions when contingencies arise. Researchers such as Anuradha Annaswamy have emphasized the need for stability guarantees, so that controllers with learning components behave safely even when conditions drift beyond their training data. Done well, AI can reduce reliance on spinning reserves, trim curtailment, and sharpen voltage and frequency regulation, making room for more clean generation without sacrificing the standards that keep the lights on.
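One common way to wrap a learned controller in a stability guarantee is a safety filter: the learned policy proposes an action, and the filter clamps it to the set of actions that provably shrinks a Lyapunov function. The sketch below is a minimal illustration under hypothetical assumptions, not the method of any particular research group: a scalar linearized model x[k+1] = a*x[k] + b*u[k] of, say, a frequency deviation, an untrusted `learned_policy`, and made-up parameters a = 1.05 (unstable without control), b = 0.5, and contraction rate rho = 0.9. Whatever the learned policy proposes, the filtered action keeps |x[k+1]| <= rho*|x[k]|, so V(x) = x**2 decreases every step.

```python
def learned_policy(x: float) -> float:
    """Hypothetical learned controller: roughly sensible but biased and untrusted."""
    return -1.5 * x + 0.8


def safety_filter(x: float, u_learned: float, a: float, b: float, rho: float) -> float:
    """Clamp u so that x_next = a*x + b*u satisfies |x_next| <= rho*|x|.

    Assumes b > 0 and 0 <= rho < 1, so V(x) = x**2 shrinks by at least rho**2 per step.
    """
    u_min = (-rho * abs(x) - a * x) / b  # action giving x_next = -rho*|x|
    u_max = (rho * abs(x) - a * x) / b   # action giving x_next = +rho*|x|
    return min(max(u_learned, u_min), u_max)


def simulate(x0: float, steps: int, a: float = 1.05, b: float = 0.5,
             rho: float = 0.9) -> list[float]:
    """Run the filtered closed loop and return the state trajectory."""
    xs = [x0]
    x = x0
    for _ in range(steps):
        u = safety_filter(x, learned_policy(x), a, b, rho)
        x = a * x + b * u
        xs.append(x)
    return xs


traj = simulate(1.0, 50)
print(f"deviation after 50 steps: {traj[-1]:.6f}")
```

When the learned action already satisfies the bound, the filter passes it through unchanged; it intervenes only when the proposal would let the deviation grow, which is one sense in which learning can be kept inside a certified envelope.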

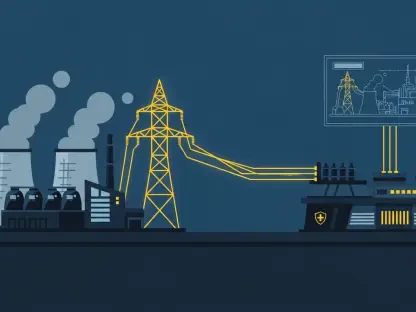

Reliability is not only about matching megawatts; it also hinges on asset health. Predictive maintenance uses data streams from transformers, breakers, inverters, and turbines to spot subtle deviations, such as shifting harmonic signatures, thermal hotspots, or changes in vibration spectra, that precede failure. That early warning lets operators schedule interventions during low-demand windows, reduce truck rolls, and extend the life and performance of capital-intensive equipment. Beyond hardware, cyber-physical risks demand new vigilance. Anomalies in control traffic can signal intrusions; AI-based detectors can flag them and help isolate threats before they propagate across protective relays or distributed energy resource aggregations. The productivity gains are tangible: fewer forced outages, safer field work, and better use of maintenance crews. In aggregate, these improvements turn reliability from a reactive practice into a proactive discipline, strengthening the backbone of a grid asked to do more with tighter margins.
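A simple baseline for this kind of early warning is a rolling z-score: compare each new sensor reading with the mean and standard deviation of a trailing window and flag large departures. The sketch assumes hourly top-oil temperature readings from a single transformer; the window length, threshold, and synthetic data are hypothetical, and production systems typically use richer multivariate or learned models.

```python
from collections import deque
from statistics import mean, stdev


def flag_anomalies(readings: list[float], window: int = 24,
                   threshold: float = 3.0) -> list[int]:
    """Return indices whose value departs from the trailing window by > threshold sigmas."""
    history: deque[float] = deque(maxlen=window)
    flagged = []
    for i, value in enumerate(readings):
        if len(history) == window:
            mu, sigma = mean(history), stdev(history)
            if sigma > 0 and abs(value - mu) / sigma > threshold:
                flagged.append(i)
        # Flagged values still enter the window; a stricter design might exclude them.
        history.append(value)
    return flagged


# Synthetic hourly temperatures (deg C) with a small periodic ripple and one spike.
readings = [60.0 + 0.5 * (i % 4) for i in range(48)]
readings[40] = 75.0
print(flag_anomalies(readings))  # [40]
```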

Turning Demand Into a Resource

The cheapest, fastest “power plant” is flexible demand shaped to the contours of renewable supply, and AI makes that shaping precise, automated, and customer-friendly. Electric vehicles can charge opportunistically when wind surges overnight, or discharge briefly through vehicle-to-grid services during late-day peaks, all while respecting driver schedules and battery health. Smart thermostats pre-cool or pre-heat buildings within comfort bands, shaving demand spikes without occupants noticing. Commercial campuses orchestrate chillers, pumps, and storage to track real-time prices and carbon-intensity signals. These behaviors require forecasting, optimization, and coordination at a granular level; algorithms combine weather data, occupancy patterns, and device constraints into small adjustments that add up across thousands or millions of endpoints. The net effect is a flatter load profile, lower system costs, and fewer reasons to fire up peaking plants.
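Overnight EV charging of this kind can be sketched as a small optimization. Assuming one-hour slots, a per-hour price or carbon signal, a charger power limit, and the hours when the car is plugged in, filling the cheapest hours first minimizes total cost for this simple linear problem. The signal values, energy target, and charger rating are hypothetical; a real scheduler would also model battery health, local grid limits, and uncertainty in departure time.

```python
def schedule_charging(prices: list[float], energy_needed_kwh: float,
                      max_kw: float, available_hours: list[int]) -> dict[int, float]:
    """Fill the cheapest plugged-in hours first, at most max_kw per one-hour slot.

    With one-hour slots, kW and kWh per slot are numerically equal. For a linear
    cost with per-slot limits, this greedy fill is optimal.
    """
    plan: dict[int, float] = {}
    remaining = energy_needed_kwh
    for hour in sorted(available_hours, key=lambda h: prices[h]):
        if remaining <= 1e-9:
            break
        kwh = min(max_kw, remaining)
        plan[hour] = kwh
        remaining -= kwh
    if remaining > 1e-9:
        raise ValueError("not enough plugged-in hours to reach the energy target")
    return plan


# Hypothetical price signal ($/kWh): flat by day, cheap overnight when wind surges,
# expensive at the 6 p.m. peak.
prices = [0.30] * 24
prices[1], prices[2], prices[3] = 0.08, 0.06, 0.07
prices[18] = 0.45
available = list(range(18, 24)) + list(range(0, 7))  # plugged in 6 p.m. to 7 a.m.
plan = schedule_charging(prices, energy_needed_kwh=20.0, max_kw=7.2,
                         available_hours=available)
print(plan)
```

The same structure applies if `prices` holds carbon intensity instead of dollars; the charger then simply follows the cleanest hours rather than the cheapest.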

Even facilities that currently symbolize rising demand can contribute flexibility. Data centers already queue workloads, and AI jobs differ widely in latency sensitivity. Non-urgent batch tasks can shift to off-peak hours or migrate to regions with abundant renewables, coordinated by schedulers that weigh power availability, network latency, and carbon intensity. During transmission constraints or extreme weather, clusters can temporarily throttle without compromising critical services, earning payments for that grid support through structured demand response programs. Crucially, such participation must be verifiable and reliable; telemetry, baselines, and settlement mechanisms need to be trustworthy for flexibility to be valued fairly. When done well, the payoff flows both ways: operators gain a controllable resource previously treated as fixed, and data centers monetize an operational trait while lowering their emissions footprint. This reframing of demand as a dispatchable partner rather than a passive endpoint expands the toolkit for balancing a cleaner grid.
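Temporal shifting of batch work can be sketched at cluster scale as well. This example assumes one-hour batch jobs, each with a power draw and a deadline hour, an hourly forecast of grid carbon intensity, and a fixed amount of cluster power headroom per hour. It is a greedy heuristic (tightest deadlines first, each placed in the cleanest hour that still has room), not an optimal scheduler, and all job names and numbers are hypothetical.

```python
def schedule_jobs(jobs: list[tuple[str, float, int]], carbon: list[float],
                  capacity_mw: float) -> dict[str, int]:
    """Greedy carbon-aware placement of one-hour batch jobs.

    jobs: (name, power_mw, deadline_hour) tuples; a job may run in any hour
          0..deadline_hour inclusive.
    carbon: forecast grid carbon intensity per hour (gCO2/kWh).
    capacity_mw: cluster power headroom available in each hour.
    Jobs with the tightest deadlines are placed first.
    """
    used = [0.0] * len(carbon)
    plan: dict[str, int] = {}
    for name, mw, deadline in sorted(jobs, key=lambda j: j[2]):
        hours = range(min(deadline + 1, len(carbon)))
        candidates = [h for h in hours if used[h] + mw <= capacity_mw]
        if not candidates:
            raise ValueError(f"job {name!r} cannot run before its deadline")
        best = min(candidates, key=lambda h: carbon[h])
        used[best] += mw
        plan[name] = best
    return plan


carbon = [400, 380, 300, 150, 120, 200, 350, 450]  # hypothetical 8-hour forecast
jobs = [("train-ckpt", 6.0, 7), ("eval", 4.0, 2), ("embed", 5.0, 5)]
print(schedule_jobs(jobs, carbon, capacity_mw=10.0))
# {'eval': 2, 'embed': 4, 'train-ckpt': 3}
```

The largest job lands in hour 3 rather than the cleanest hour 4 because headroom there is already partly used; lowering `capacity_mw` is one simple way to represent a throttling request during a demand response event.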

Planning Under Uncertainty—and Speeding Approval

Planning new generation, transmission, and storage has always been about peering into the future, but today the uncertainty spans not only load growth and fuel prices but also shifting weather regimes and technology learning curves. Where equipment models are proprietary or incomplete, AI helps planners stand in for the missing physics with surrogate models that approximate equipment behavior closely enough to test thousands of system configurations quickly. Deepjyoti Deka and colleagues point to the erosion of system inertia and the rise of distributed assets as core shifts that make past heuristics unreliable. Learning-based tools can simulate cascading effects, evaluate reliability metrics under stress, and propose portfolios that meet criteria for adequacy, affordability, and resilience. In practice, this means identifying transmission corridors that unlock multiple renewable sites, sizing storage to absorb forecast errors, and placing flexible demand resources where they deliver the most relief. Speed matters because long queues and contested siting can push critical projects past the dates when they are needed.

No plan survives first contact with the permitting process unless it anticipates regulatory expectations and community concerns. Here, AI shortens feedback loops by testing design variants against environmental, routing, and interconnection constraints and producing documentation that answers likely questions before they arise. Language models can parse statutes, summarize precedent, and align filings with agency templates, reducing the risk of avoidable rework. At the same time, safe deployment demands guardrails: interoperability standards so devices communicate reliably, cybersecurity frameworks to contain intrusions, and data-sharing agreements that protect privacy while enabling performance verification. Regulators benefit from the same tools, using scenario analysis to scrutinize reliability claims and benchmark proposals. By institutionalizing shared modeling environments, agencies, utilities, and developers can converge faster on projects that withstand scrutiny, shaving months or years from approval cycles without diluting public oversight.
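Routing screens of this kind are often framed as least-cost path problems over a map of siting costs. The sketch assumes a grid in which each cell carries a hypothetical crossing cost (standing in for terrain, land use, or environmental sensitivity) and `None` marks excluded areas such as protected wetlands; it uses Dijkstra's algorithm to find the cheapest corridor. Real corridor studies work on far finer rasters that combine many weighted layers.

```python
import heapq
import math

Cell = tuple[int, int]


def least_cost_path(cost, start: Cell, goal: Cell):
    """Dijkstra over a cost grid; None cells cannot be crossed.

    A path's cost is the sum of the costs of the cells it enters (start excluded).
    Returns (total_cost, path), or (math.inf, []) if the goal is unreachable.
    """
    rows, cols = len(cost), len(cost[0])
    dist = {start: 0.0}
    prev: dict[Cell, Cell] = {}
    heap = [(0.0, start)]
    while heap:
        d, cell = heapq.heappop(heap)
        if cell == goal:
            break
        if d > dist[cell]:
            continue  # stale entry superseded by a cheaper route
        r, c = cell
        for nr, nc in ((r + 1, c), (r - 1, c), (r, c + 1), (r, c - 1)):
            if 0 <= nr < rows and 0 <= nc < cols and cost[nr][nc] is not None:
                nd = d + cost[nr][nc]
                if nd < dist.get((nr, nc), math.inf):
                    dist[(nr, nc)] = nd
                    prev[(nr, nc)] = cell
                    heapq.heappush(heap, (nd, (nr, nc)))
    if goal not in dist:
        return math.inf, []
    path = [goal]
    while path[-1] != start:
        path.append(prev[path[-1]])
    return dist[goal], path[::-1]


X = None  # excluded cell, e.g. a protected wetland (hypothetical)
grid = [
    [1, 1, 2, 1],
    [1, X, X, 1],
    [1, 5, X, 1],
    [1, 1, 1, 1],
]
print(least_cost_path(grid, (0, 0), (3, 3)))
```

Swapping in a different cost layer and rerunning is cheap, which is what makes it practical to compare many design variants before a filing is drafted.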

Accelerating Discovery and Convening Action at MIT

Progress in energy technologies depends on matter as much as models, and AI is accelerating the path from hypothesis to hardware. In materials research, AI-augmented simulations help map how atomic structures translate into macroscopic performance, guiding the search for electrodes, membranes, absorbers, and alloys with superior stability and efficiency. Ju Li and collaborators have shown how active learning can orchestrate closed-loop experiments: literature-mining agents propose candidates, robotic platforms synthesize and measure them, and algorithms update their models to focus on promising regions of chemical space. Because each result informs the next choice, the loop tightens quickly, compressing work that once took decades into cycles measured in months. Battery chemistries evolve with fewer dead ends, solar cells approach theoretical limits through tandem architectures tuned by data, and thermoelectrics inch closer to viability where waste heat is abundant. The overall effect is a shift from artisanal discovery to systematic, scalable exploration anchored by reproducible workflows.
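The closed loop can be caricatured in a short sketch. `run_experiment` is a hypothetical stand-in for robotic synthesis and measurement of a composition fraction x; the model is a deliberately crude nearest-neighbor surrogate, and the acquisition rule adds a distance-based exploration bonus in the spirit of upper-confidence-bound methods. Real platforms typically use Gaussian processes or other probabilistic models; the point is only to show how each measurement updates the model and steers the next experiment.

```python
import math


def run_experiment(x: float) -> float:
    """Hypothetical measured performance of composition fraction x (peak near 0.73)."""
    return math.exp(-((x - 0.73) ** 2) / 0.01)


def acquisition(x: float, measured: dict[float, float], kappa: float) -> float:
    """Optimistic score: nearest measured value plus a bonus for distance from data."""
    nearest = min(measured, key=lambda m: abs(m - x))
    prediction = measured[nearest]   # nearest-neighbor surrogate
    uncertainty = abs(nearest - x)   # farther from any measurement = less certain
    return prediction + kappa * uncertainty


def active_learning(pool: list[float], n_rounds: int, kappa: float = 1.0):
    """Alternate between choosing a candidate and 'measuring' it; return best and data."""
    measured = {x: run_experiment(x) for x in (pool[0], pool[-1])}  # seed at the extremes
    for _ in range(n_rounds):
        candidates = [x for x in pool if x not in measured]
        nxt = max(candidates, key=lambda x: acquisition(x, measured, kappa))
        measured[nxt] = run_experiment(nxt)  # the "robot" runs one experiment
    best = max(measured, key=measured.get)
    return best, measured


pool = [i / 100 for i in range(101)]
best, measured = active_learning(pool, n_rounds=10)
print(f"best x = {best:.2f} after {len(measured)} of {len(pool)} possible experiments")
```

In this toy setting the loop reaches a high-performing composition within a dozen experiments instead of testing all 101 candidates; raising `kappa` favors exploration, while lowering it favors refinement near the current best.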

Institutional capacity has proved just as important as technical leaps, and dedicated initiatives have taken shape to turn prototypes into systems. Programs focused on fusion use AI to predict and avoid plasma disruptions, a step closer to stable operation. Grid-planning efforts combine climate projections with infrastructure maps to chart investments that hold up under extreme events. Robotics projects teach machines to perform inspection and maintenance tasks in hazardous settings, improving safety and lowering operating costs across wind, solar, and transmission assets. Recognizing that AI’s own footprint must shrink, researchers are targeting chip efficiency, workload scheduling, and facility architecture to cut energy use in data centers. The Data Center Power Forum brought operators and utilities into the same room to tackle grid impacts with pragmatic frameworks, and a 2025 symposium on AI and energy convened industry, academia, government, and nonprofits to align priorities and commit resources. Together, these efforts have grounded ambition in engineering discipline, clarified governance needs, and outlined next steps: accelerate interoperability standards, scale demand flexibility markets with transparent measurement, embed stability guarantees in AI controllers, and expand shared modeling environments so plans and permits move in weeks, not months.