The rapid proliferation of generative artificial intelligence is transforming a technology once perceived as ethereal into one of the world’s most tangible and voracious consumers of physical resources. Unlike previous digital advancements that often prioritized greater efficiency, the current wave of AI is driven by an insatiable demand for raw computational power, creating an entirely new category of energy consumption. This explosive growth in electricity and water usage is setting up a direct and urgent confrontation between technological progress and global climate objectives, forcing a critical reevaluation of the true cost of innovation in the modern era and whether our energy infrastructure is prepared for the challenge ahead.

The Unprecedented Surge in Demand

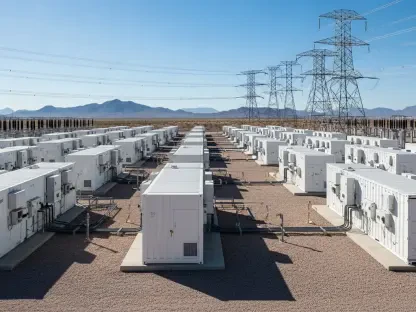

The scale of AI’s burgeoning energy needs is staggering, with forecasts pointing toward a fundamental reshaping of global electricity demand. The International Energy Agency projects that by 2026, the combined electricity consumption of data centers and AI will more than double, soaring beyond 1,000 terawatt-hours annually, roughly the entire yearly electricity usage of an industrialized nation like Japan. This is not a distant concern. In the United States alone, data centers accounted for 4.4% of the nation’s total electricity consumption in 2023, and Department of Energy projections indicate this share could climb as high as 12% by 2028. This rate of expansion is akin to the emergence of an entirely new industrial sector in just a few years, placing an unforeseen and immense strain on energy grids that were not designed to accommodate such rapid, concentrated growth.
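To put the U.S. figures in perspective, the short sketch below works out the compound annual growth rate implied by a rise from a 4.4% share in 2023 to a 12% share in 2028. It is a back-of-the-envelope illustration only: it takes the upper end of the projection, assumes smooth year-over-year growth, and the function name `implied_annual_growth` is made up for this example.

```python
def implied_annual_growth(start_value: float, end_value: float, years: int) -> float:
    """Return the compound annual growth rate that takes start_value
    to end_value over the given number of years."""
    if start_value <= 0 or end_value <= 0 or years <= 0:
        raise ValueError("values and years must be positive")
    return (end_value / start_value) ** (1 / years) - 1


# Figures quoted in the text: 4.4% of U.S. electricity in 2023,
# and an upper-bound projection of 12% by 2028.
START_SHARE = 4.4
END_SHARE = 12.0
YEARS = 2028 - 2023

share_growth = implied_annual_growth(START_SHARE, END_SHARE, YEARS)
print(f"Implied growth in data center share: {share_growth:.1%} per year")
```

Under those assumptions the share would have to grow by a little over 22% every year for five years running, which is the kind of pace the grid comparison above is pointing at.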

This explosive increase is rooted in the computational architecture of generative AI technologies like large language models and advanced video synthesizers. Training and operating these models requires vast clusters of power-hungry Graphics Processing Units (GPUs) that run continuously at high capacity. The disparity in energy use is stark at the query level: a single request to a generative AI model can consume up to ten times the electricity of a conventional web search. When multiplied by billions of daily AI interactions, the cumulative energy draw becomes monumental. This intense activity also generates a significant amount of heat, necessitating massive, energy-intensive cooling systems. These systems frequently rely on evaporative cooling, which consumes not only additional electricity but also millions of gallons of fresh water daily, adding severe strain on another vital resource, especially in water-scarce regions.
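The way a small per-query difference compounds can be sketched with a few lines of arithmetic. Only the tenfold multiplier comes from the text, and it is an upper bound; the per-search energy figure and the daily query count below are illustrative assumptions, not measurements, so the output shows orders of magnitude rather than an estimate.

```python
# Illustrative assumptions (not from the article, not measured values):
SEARCH_WH = 0.3                    # assumed energy per conventional web search, in watt-hours
QUERIES_PER_DAY = 1_000_000_000    # assumed one billion queries per day

# From the text: an AI request can use up to ten times a web search.
AI_MULTIPLIER = 10


def daily_energy_mwh(wh_per_query: float, queries_per_day: float) -> float:
    """Total daily energy in megawatt-hours (1 MWh = 1,000,000 Wh)."""
    return wh_per_query * queries_per_day / 1_000_000


search_mwh = daily_energy_mwh(SEARCH_WH, QUERIES_PER_DAY)
ai_mwh = daily_energy_mwh(SEARCH_WH * AI_MULTIPLIER, QUERIES_PER_DAY)

print(f"Web search: {search_mwh:,.0f} MWh per day")
print(f"AI queries: {ai_mwh:,.0f} MWh per day")
print(f"AI queries: {ai_mwh * 365 / 1_000_000:.2f} TWh per year")
```

With these placeholder inputs, the same billion requests go from about 300 MWh a day as web searches to about 3,000 MWh a day as AI queries, before counting model training or cooling.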

A Collision Course with Climate Reality

The escalating demand for power carries profound environmental implications that threaten to undermine global climate targets. Data centers are already responsible for approximately 1% of global CO2 emissions, a figure set to climb sharply. An analysis reported by MIT Technology Review found that the electricity used by U.S. data centers has a carbon intensity that is, on average, 48% higher than the national average. This is largely because their critical need for constant, uninterrupted power often forces them to rely on fossil fuels as a backup when renewable energy sources are intermittent or unavailable. This dependence on carbon-intensive energy to ensure uptime creates a direct conflict with decarbonization goals, complicating the transition to a cleaner energy future and potentially negating progress made in other sectors.

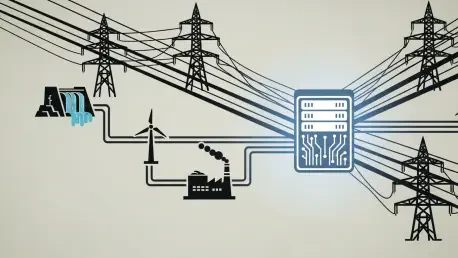

Beyond direct emissions, this surge is placing immense strain on national power grids, which are ill-equipped to handle such rapid and localized increases in demand. In response, the technology industry is making colossal investments to secure its energy future, signaling a major shift in corporate strategy. Microsoft has committed over $50 billion to new AI infrastructure, while Meta is actively exploring unconventional solutions like nuclear power to guarantee a stable supply for its operations. Perhaps most indicative of the scale of this new reality is the “Stargate” project, an OpenAI-backed initiative to construct a series of mega-data centers projected to consume more electricity than some U.S. states. These actions signal an industry preparing for a future where access to energy has become a primary competitive battleground, raising critical questions about resource allocation and public infrastructure stability.

A Paradigm Shift for a New Digital Age

A crucial paradox emerges from this challenge: the very technology driving the energy crisis may also be a powerful tool for mitigating it. While AI’s development creates a significant energy burden, experts cautiously believe it could also unlock new efficiencies across society by optimizing energy grids, streamlining supply chains, and accelerating the development of clean technologies. The result is a “race between burden and benefit,” in which the net impact of AI on the environment remains uncertain. Navigating this landscape will require both industry innovation and government regulation. Proposed strategies include mandating that tech companies pair new data centers with dedicated low-carbon power sources, an approach some companies have begun to explore, though scaling it remains a bottleneck.

The overarching consensus is that a fundamental paradigm shift is required to align the trajectory of AI with sustainable energy use. Artificial intelligence is no longer an abstract digital concept but a tangible force with a massive and rapidly expanding physical footprint. Harnessing its benefits without jeopardizing climate goals will take a concerted, strategic effort. Industry leaders need to extend their metrics of success beyond model size and performance to include power budgets and carbon footprints as core design considerations. In parallel, governments need to prioritize the expansion of flexible, clean energy grids and foster innovation in green technologies. How we navigate this new reality will determine the sustainability of the next technological era.