Artificial intelligence (AI) systems have become indispensable in daily life, powering everything from search engines to voice assistants. Despite their benefits, AI applications, especially large language models (LLMs), have a significant drawback: their heavy energy consumption. In Germany alone, data centers consumed 16 billion kilowatt-hours (kWh) of energy in 2020, and consumption is projected to rise to 22 billion kWh by 2025. This upward trend underscores the urgent need for more energy-efficient AI technologies.

A New Dawn in AI Energy Efficiency

Revolutionizing Neural Network Training

Researchers at the Technical University of Munich (TUM), led by Professor Felix Dietrich, have developed a method that could drastically reduce the energy consumed during AI training. Conventional training determines the parameters between neural network nodes iteratively, which requires intensive computation; the new approach computes them using probabilities instead. It concentrates on critical points in the training data, where values change rapidly and significantly. The team's aim is to use this approach to learn energy-conserving dynamical systems, which evolve over time according to fixed rules, from data while maintaining high accuracy.

The method was presented at the Conference on Neural Information Processing Systems (NeurIPS 2024). According to the researchers, it can train neural networks up to 100 times faster than existing methods. Shorter training translates into significant energy savings, addressing a major pain point of current AI technology. The approach developed by Dietrich and his team also has implications beyond AI, for any field that relies on dynamical systems for predictions, including climate modeling and financial forecasting.

The Role of Probabilistic Parameter Selection

The core of TUM's approach lies in probabilistic parameter selection. Rather than refining parameter values over many iterative cycles, the method selects them with probabilities that favor key data points, so suitable parameters can be found with far less computation and energy. This is akin to finding shortcuts through a complex maze: the network reaches its learning objectives more quickly and efficiently.

Conventional training requires many iterations with high computational loads. TUM's technique instead concentrates computation on the parts of the data where values vary most. By prioritizing these critical points, the probabilistic method derives accurate parameter values without the computational strain of conventional methods. Just as important, this efficiency gain does not come at the cost of quality: the method preserves the robustness and accuracy of its predictions, which is crucial for applications that require precision.
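How might parameters be chosen by probability rather than by iteration? The article does not spell out the TUM algorithm, so the following Python sketch is only an illustration under stated assumptions: each hidden neuron of a one-hidden-layer network is built from a pair of training points, pairs across which the target changes fastest are sampled with higher probability, and the output layer is then fitted with a single least-squares solve instead of a gradient-descent loop. The pair construction, the sampling rule, and all function names (`pair_rates`, `sample_hidden_layer`, `fit_sampled_network`, and so on) are hypothetical; this is not the researchers' code. It requires NumPy.

```python
# Hypothetical sketch: a one-hidden-layer network whose hidden weights are
# sampled from pairs of data points instead of being trained iteratively.
# The pair construction and sampling rule are illustrative assumptions,
# not the TUM researchers' published algorithm.
import numpy as np


def pair_rates(x, y, i, j):
    """Finite-difference rate of change of the target between points i and j."""
    dist = np.linalg.norm(x[j] - x[i], axis=1)
    return np.abs(y[j] - y[i]) / dist


def sample_hidden_layer(x, y, n_hidden, rng, candidates_per_neuron=20):
    """Sample hidden weights, favouring pairs where the target changes fastest."""
    n = x.shape[0]
    m = candidates_per_neuron * n_hidden
    i = rng.integers(0, n, size=m)
    j = rng.integers(0, n, size=m)
    # Drop pairs of coincident points: they define no direction.
    ok = np.linalg.norm(x[j] - x[i], axis=1) > 0
    i, j = i[ok], j[ok]
    rate = pair_rates(x, y, i, j)
    # Assumed rule: sampling probability proportional to the rate of change,
    # falling back to uniform sampling if the target is constant.
    if rate.sum() > 0:
        probs = rate / rate.sum()
    else:
        probs = np.full(rate.size, 1.0 / rate.size)
    pick = rng.choice(rate.size, size=n_hidden, p=probs)
    i, j = i[pick], j[pick]
    dx = x[j] - x[i]
    # Scale so the pre-activation is -1 at x_i and +1 at x_j, placing each
    # tanh transition between the two sampled points.
    w = 2.0 * dx / np.sum(dx**2, axis=1, keepdims=True)
    b = -np.sum(w * (x[i] + x[j]) / 2.0, axis=1)
    return w, b


def features(x, w, b):
    """Hidden-layer activations plus a constant column for the output bias."""
    h = np.tanh(x @ w.T + b)
    return np.hstack([h, np.ones((x.shape[0], 1))])


def fit_sampled_network(x, y, n_hidden=100, seed=0):
    """Sample the hidden layer, then fit the output layer in one solve."""
    rng = np.random.default_rng(seed)
    w, b = sample_hidden_layer(x, y, n_hidden, rng)
    # Linear least squares replaces the usual gradient-descent loop.
    coef, *_ = np.linalg.lstsq(features(x, w, b), y, rcond=None)
    return w, b, coef


def predict(x, w, b, coef):
    """Evaluate the sampled network on new inputs."""
    return features(x, w, b) @ coef


# Toy data: a target that is flat except for one sharp transition at x = 0.
x_train = np.linspace(-1.0, 1.0, 200).reshape(-1, 1)
y_train = np.tanh(20.0 * x_train[:, 0])
w, b, coef = fit_sampled_network(x_train, y_train, n_hidden=100, seed=0)
mse = float(np.mean((predict(x_train, w, b, coef) - y_train) ** 2))
print(f"training MSE: {mse:.2e}")
```

On this toy target, pairs of points that straddle the sharp transition at x = 0 receive far higher sampling weights than pairs deep in the flat regions, so the sampled neurons cluster where the function actually changes. Solving the last layer in closed form is what removes the training loop in this sketch; whether the TUM method fits its final layer the same way is an assumption.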

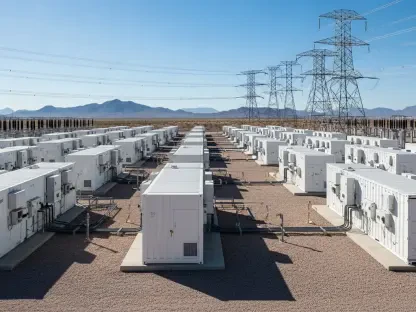

Addressing the Growing Energy Demands

Implications for Data Centers and Infrastructure

The growing capabilities of AI applications have driven a steady rise in energy consumption, particularly in data centers. These facilities house the massive computing power that AI requires, and the environmental footprint of their energy use is a growing concern. The probabilistic training method developed by the TUM researchers could help reduce this impact significantly.

Reducing energy demands for AI training could have a cascading effect. If data centers can cut back on the energy required to train neural networks, it would not only lower operational costs but also reduce the overall carbon footprint. This is increasingly important as the tech industry faces mounting pressure to adopt greener, more sustainable practices. Additionally, this innovation could make AI technologies more accessible, as reduced energy costs translate to lower barriers to entry for smaller enterprises and startups.

Broadening the Reach of AI Applications

The need for energy-efficient AI extends well beyond Germany. As global demand for AI continues to grow, so do its strain on power grids and its carbon footprint. As AI technologies evolve and become more prevalent, reducing their energy use will be crucial for sustainable development, and that will require researchers, engineers, and policymakers to collaborate on developing and implementing greener AI solutions.